Sometimes you might want certain parts of your website to remain private or hidden away from search results. This is common for pages that may contain sensitive information on your site, such as admin pages.

You may also want to exclude pages that provide no value to your audience, such as category pages and even pages with duplicate content (which can create a host of problems for search engines). Whatever your reasons are for hiding certain parts of your website, there are a number of ways you can achieve this:

Use a Robots.txt file

This first method should NOT be used for pages with sensitive information. There are two key reasons for this, firstly some rogue search engines will completely ignore this file and crawl the site anyway. And secondly, users can still view the webpage in their browser if they know the URL. In some rare cases, Google may even still show the page in the search results, especially if there are other websites linking to that page or if Google thinks it’s highly relevant to the user’s search query.

To see if your website has a robots.txt file already, you can use Google’s robot.txt tester tool. This tool will also show which pages on your site are blocked and which ones crawlers can access. You can also view your website’s robots.txt file by entering “/robots.txt” in the address bar after your site’s domain (like the screenshot below):

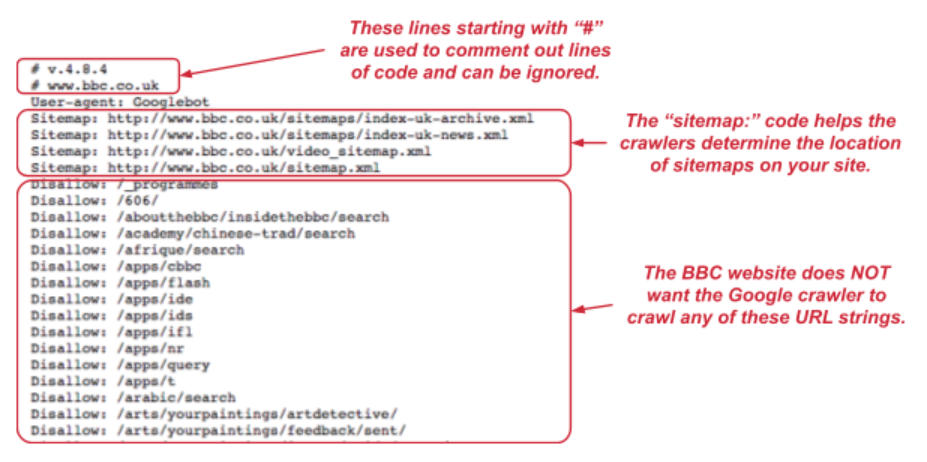

At the most minimum a robots.txt file should include these two lines of text:

User-agent: [user-agent name]Disallow: [URL string not to be crawled]

The user agent part refers to a specific crawler which you are giving instructions to, such as Googlebot or Bingbot. If you want to refer to all crawlers, you can use the * symbol. And the ‘Disallow’ part refers to the part of your website you want to block from crawlers. You can leave this blank if you want crawlers to access all your pages.

Below is a snippet of the robots.txt file used on the BBC website. Please note, including the sitemap part is not required, as you can add your sitemap in search console.

You can even see this creative example of a robots.txt file used on our site. It is important to also know that not all websites need a robots.txt file. If your site does not have one, it just means that all crawlers like Googlebot have full access to crawl your website.

It is important to correctly format your robots.txt file, as an incorrect file can block Googlebot from accessing your website. Here are some best practices for creating a good robots.txt file:

- Always name your file “robots.txt” (all in lowercase).

- Do not use robots.txt to block web pages with sensitive, private information (see next section).

- Always block internal search results pages on your site from being crawled.

- Always add the robots.txt file to the main directory or root domain, so crawlers can easily find it.

- Ensure your robots.txt is not blocking access to javascript, CSS and image files used on your website, as this could prevent Google from viewing your content.

Please note, if you are using a CMS like WordPress, then it’s worth checking if it automatically generates a robots.txt file for your website, if it does not have one. You can override this file by creating and uploading your own robots.txt file to your site’s root directory. For more information on creating a robots.txt file for your website, please see Google’s guidelines.

Hiding Sensitive Pages on Your Website

As already mentioned, robots.txt is not a good method of hiding pages with sensitive information, such as customer details or even confidential company details. Instead it would be best to password protect these pages or even remove the page/s of your site completely. You can even use the noindex tag, if you do not want a page appearing in search engines, but still want users to access it. If you are worried about private pages appearing in the Google search results you can ask an SEO expert or a developer about hiding these pages.